Integrating Semantic Segmentation and Retinex Model for Low Light Image Enhancement

Minhao Fan, Wenjing Wang, Wenhan Yang and Jiaying Liu

Proceedings of the 28th ACM International Conference on Multimedia (MM '20)

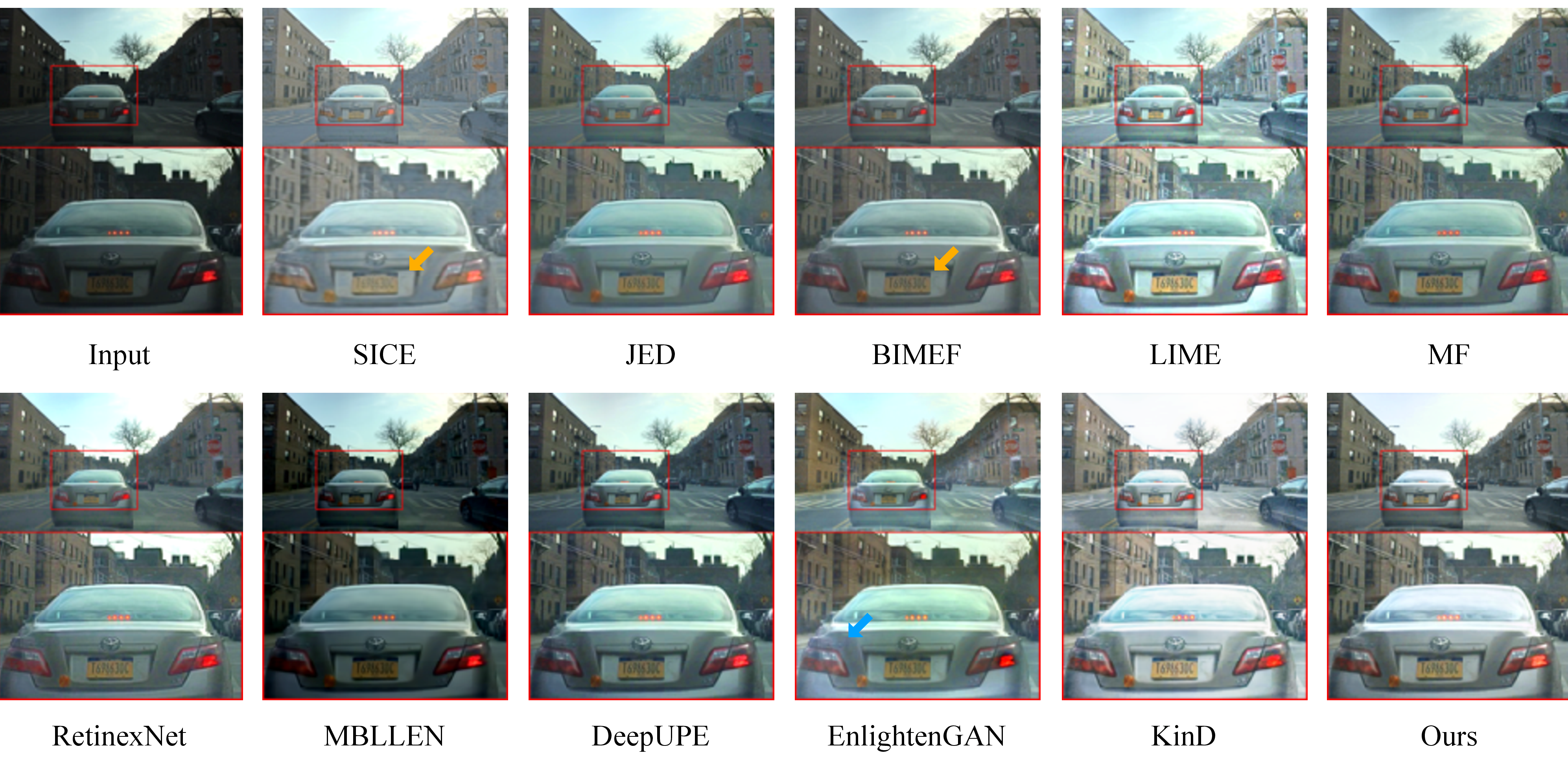

Figure. Comparison of low-light enhancement results. Blurred details and unnatural artifacts are indicated by yellow and blue arrows, respectively.

Abstract

The Retinex model is widely adopted in low-light image enhancement tasks. The basic idea of Retinex theory is to decompose an image into reflectance and illumination. This ill-posed decomposition is usually handled with hand-crafted constraints and priors. In this paper, we use recently emerging deep-learning-based approaches to integrate Retinex decomposition with semantic information awareness. Based on the observation that different objects and backgrounds have different material, reflection and perspective attributes, regions of a single low-light image may require different adjustment and enhancement with respect to contrast, illumination and noise. We propose a three-part enhancement pipeline that effectively utilizes semantic layer information. Specifically, we extract the segmentation, reflectance and illumination layers, and concurrently enhance each region separately, i.e., sky, ground and objects for outdoor scenes. Extensive experiments on both synthetic data and real-world images demonstrate the superiority of our method over current state-of-the-art low-light enhancement algorithms.
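To make the underlying idea concrete, the following is a minimal NumPy sketch of a Retinex-style decomposition, in which an image is modeled as the element-wise product of reflectance and illumination, followed by a region-wise illumination adjustment driven by a segmentation map. It is an illustration under stated assumptions, not the pipeline proposed in this paper: the illumination estimate (per-pixel channel maximum) is a simple hand-crafted prior, and the function names, the label encoding and the per-region gamma values are hypothetical.

```python
import numpy as np


def retinex_decompose(image, eps=1e-4):
    """Split an RGB image with values in [0, 1] into reflectance and illumination.

    Assumption: illumination is the per-pixel maximum over color channels,
    a simple hand-crafted prior used here only for illustration.
    """
    illumination = np.maximum(image.max(axis=2, keepdims=True), eps)  # (H, W, 1)
    reflectance = image / illumination                                # (H, W, 3)
    return reflectance, illumination


def enhance_by_region(image, labels, gammas):
    """Brighten each semantic region with its own gamma curve.

    `labels` is an (H, W) integer map (hypothetical encoding, e.g.
    0 = sky, 1 = ground, 2 = objects) and `gammas` maps each label to a
    gamma exponent; gamma < 1 brightens the illumination layer.
    """
    reflectance, illumination = retinex_decompose(image)
    adjusted = illumination.copy()
    for label, gamma in gammas.items():
        mask = labels == label
        adjusted[mask] = illumination[mask] ** gamma
    # Recombine the unchanged reflectance with the adjusted illumination.
    return np.clip(reflectance * adjusted, 0.0, 1.0)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    dark = rng.uniform(0.01, 0.2, size=(4, 6, 3))   # synthetic low-light image
    seg = rng.integers(0, 3, size=(4, 6))           # synthetic segmentation map
    bright = enhance_by_region(dark, seg, {0: 0.8, 1: 0.5, 2: 0.4})
    print(dark.mean(), bright.mean())
```

Applying a different gamma per label mirrors the observation that sky, ground and objects may need different amounts of illumination adjustment; a learned method would replace both the decomposition and the per-region curves with network outputs.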